Meta to Add Child-Focused Safety Features to Instagram

Meta is rolling out safety features for Instagram accounts that feature children and are managed by adults. Although Instagram does not allow children under 13 to have their own accounts, parents and managers can still operate profiles on their behalf. Meta had previously introduced privacy-focused features specifically for teen users. Now, some of these protections are being extended to accounts showcasing children.

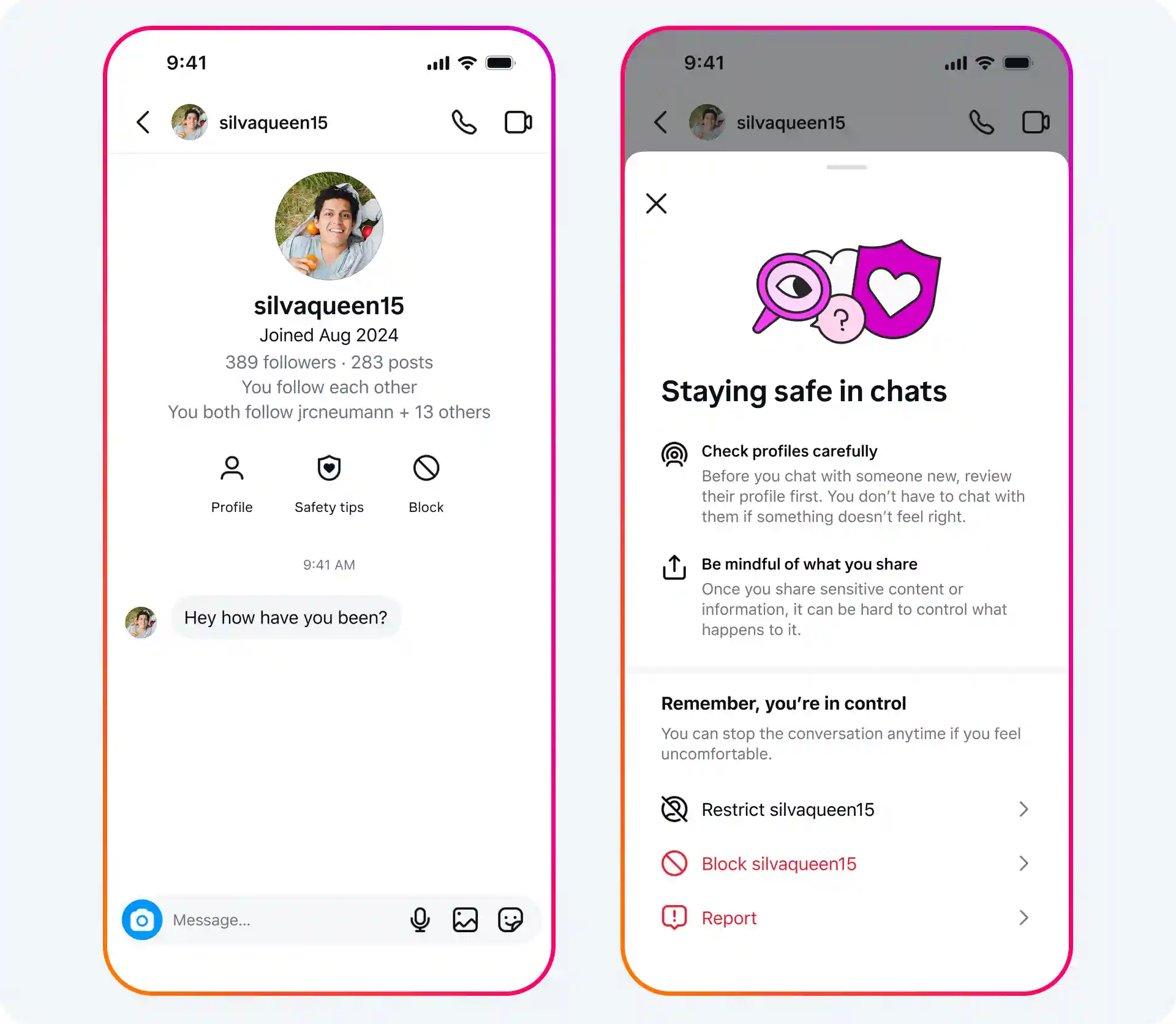

Among the key updates, strict message settings will now block unwanted direct messages, and the Hidden Words tool will be turned on by default to filter certain comments. Accounts blocked by teens won’t be recommended again. Users will have a harder time finding these accounts in search, and their comments will be hidden.

This year, Meta took down 135,000 Instagram accounts for inappropriate messages, along with a further 500,000 linked profiles across Instagram and Facebook. The company also continues to encourage safe interactions by bringing more resources to teens, including safety tips and nudity protection features in DMs that blur explicit images. These features will become automatically available to eligible accounts worldwide in the coming months as part of Meta’s ongoing child and teen protection efforts on its platforms.